Buzzword

11 November 2022

The timeline of my experience at Durhack

10:39 - The beginning

Today I’m taking part in Durhack, the Durham University Hackathon and I’m determined to make the dumbest project at the hackathon. My vague idea is a project with the name of Buzzword. It’s a distributed-first, scalable programming language with built-in automatic differentiation for Machine Learning on the Blockchain. Yup.

10:53 - The group that uses Rust

I have just met a group being led by a Rust programmer, the rest of the group is new to Rust. I inquire about this and remark that it takes a lifetime (pun-intended) to understand the Rust borrow-checker.

10:57 - Doubly linked lsits

Today I learned about Doubly Linked Lists in Rust. Gnarly.

use std::rc::Rc;

use std::cell::RefCell;

pub struct List<T> {

head: Link<T>,

tail: Link<T>,

}

type Link<T> = Option<Rc<RefCell<Node<T>>>>;

struct Node<T> {

elem: T,

next: Link<T>,

prev: Link<T>,

}11:04 - A profound realisation

I realise that if I spend my entire time writing about Durhack, I will not have time to do any programming.

11:06 - The introductory talk commences

12:03 - A smart idea

I decide to stop writing and start programming.

12:04 - Scratch that

I realise that I need to program in Solidity, but I do not know how to program in solidity. But I have 24 hours to work this out, I think.

12:05 - Intermission

I will see if I can find any food and after that I will start programming, I promise.

12:08 - Disappointment

I have been informed that the Subway order has been delayed. I will begin programming albeit hungry and a bit sad.

12:18 - I need a plan

I think the most important part of this project is getting machine learning done on the blockchain. So I’ve made the decision to postpone the language implementation until later.

I started defining the necessary data types to build an API for Neural Networks but I’m wondering if I even need to do this. My idea is that I can start with classical Machine Learning techniques and potentially add Neural Networks if I have time. My only concern with Neural networks is the backpropogation step, I want to keep my project simple so I don’t make a mistake and spend more time debugging.

I’ll keep the API in a separate file but I’ll focus my efforts on getting a proof of concept first.

12:33 - Another realisation

I am learning Solidity at the same time as building a relatively non-trivial project in it. I am currently working out how to read an array of integers from a string.

12:53 - Progress??

I am learning a lot about solidity and I think I’ve just read a list of integers from a string?? My code compiles, which I did not expect.

12:57 - ???

I feel like a Boomer. I think I just deployed some code to the blockchain?

12:59 - ?????

I ran my code and it did not error?

13:00 - Progress!

I can read strings into integer arrays and store them on the blockchain! It only took 27 minutes, 66 lines of code and countless lookups to the solidity reference document.

pragma solidity ^0.8.7;

contract Buzzword {

bytes1 constant ZERO = '0';

bytes1 constant NINE = '9';

int[] xs;

int[] ys;

int[] work;

function loadData(string memory xstring, string memory ystring) public payable {

bytes memory xbytes = bytes(xstring);

bytes memory ybytes = bytes(ystring);

// Work on the assumption that there is at least one item in the arrays

xs = readIntegerArray(xbytes);

ys = readIntegerArray(ybytes);

}

function testXsContainsData() public view returns (int) {

return xs[0];

}

function readIntegerArray(bytes memory bArray) private returns (int[] memory){

work = new int[](0);

// Work out how many numbers there are

uint numIntegers = 0;

for (uint index = 0; index < bArray.length; index++) {

if (bArray[index] == ";") {

numIntegers++;

}

}

// The beginning of the number begins at

uint bArrayIndex = 0;

for (uint index = 0; index < numIntegers; index++) {

// Read the sign

int sign;

if (bArray[bArrayIndex] == "+") {

sign = 1;

}

else if (bArray[bArrayIndex] == "-") {

sign = -1;

}

else {

require(false);

}

// Increment the string index

bArrayIndex++;

uint number;

// Read numbers until the termination character

while (bArray[bArrayIndex] != ";") {

number *= 10;

bytes1 digitByte = bArray[bArrayIndex];

require(ZERO <= digitByte && digitByte <= NINE);

uint8 digitValue = uint8(digitByte) - uint8(ZERO);

number += digitValue;

bArrayIndex++;

}

work.push(int(number) * sign);

}

return work;

}

}I am not looking forward to implementing any sort of machine learning.

13:06 - Problems

I will probably want to perform Machine Learning on datasets where the data contains more than a singular integer feature. I can read an array of integers but I will probably want to read arrays of tuples of integers. But I can worry about this later.

13:14 - The state of my browser tabs

I currently have 17 solidity-related browser tabs open and because I am not a heathen, this makes me anxious.

13:15 - Basic machine learning

I think I’ll take a small break before I start with something small. My plan is to build a linear regression model and train it using gradient descent.

13:22 - Getting started

Break over. Machine Learning time.

13:39 - Lunch

I re-started my break and ate Durhack-provisioned Subway. I rate the food quite highly, I was satisfied.

13:48 - Pain

It would be really usefuly if solidity had a library for automatic differentiation. It is starting to feel like the language wasn’t made for machine learning. I’m taking a break and I will think about my plan moving forward.

14:02 - Thoughts

I know from my econometrics book that the OLS estimate for the univariate model, (Y = \beta_0 + \beta_1 X) takes on the following form.

\[ \begin{align} \beta_0 &= \bar{X} \\ \beta_1 &= \frac{\text{Cov}(X, Y)}{\text{Var}(X)} \end{align} \]

For now I will use this shortcut and potentially move onto gradient descent later. There are many similar shortcuts like this since I can use references to find the derivatives for some common loss and activation functions.

14:06 - Pain

Solidity does not support floating point numbers. This is disasterous.

14:18 - Acceptance

I have sped-run the stages of grief and I am embracing fixed-point arithmetic. What could go wrong.

An aside

I had two ideas of re-implementing floating point arithmetic in Solidity or creating a rational number datatype and implementing the arithmetic operations myself. There are also some libraries for floating point arithmetic in Solidity so I am considering potentially using those in the future.

14:21 - Disaster

Solidity does not actually support fixed-point arithmetic. The language documentation states that fixed-point numbers can be declared but cannot be assigned to. This is horrific.

14:24 - Weighing up my options

I now have a few options. I can use a hardened and tested library for floating-point arithmetic in Solidity which would make my life easier or I could create a hack to represent rational numbers.

\[ \dots \]

14:25 - Rational numbers

So, I can represent a rational number as a pair of integers

\[ \mathbb Q := \{ (p, q) : p, q \in \mathbb Z \} \]

Define equality as the following relation

\[ R = \{\left<(p_1, q_1), (p_2, q_2)\right> : p_1 q_2 = q_1 p_2\} \]

The reader can verify that this indeed defines an equivalence relation and returns expected results.

It is currently 14:31 so I won’t spend time formalising my construction but the definition of addition and multiplication comes naturally if you interpret \((p, q)\) as representing the fraction \(\frac{p}{q}\).

15:09 - ???

I went to test my linear regression code. Loading the values worked fine after I fixed a bug (try and find it in the code above!) but when I ran the code to train the model, the Remix IDE crashed.

15:21 - Trying again, minor fixes

So I tried running the linear regression with smaller values, the IDE didn’t crash and the machine did in fact learn but it had learned the function \(y = 2\) for the data \(X = [1, 2, 3], Y = [3, 5, 8]\). Immediately I was able to spot some minor bugs in my code. There’s actually a glaring major mistake in what I wrote above for the OLS estimate for \(\beta_0\). And secondly, I made an subtle error in my training code where I trained a model to predict \(X\) using as the predictor \(X\). There was also another mistake where I calculated the variance wrong and another mistake where I

\[ \dots \]

15:46 - More problems

Firstly, the Remix IDE appears to be very prone to crashing. I keep getting the following error.

[21067:1119/154525.567488:ERROR:v8_initializer.cc(714)] V8 javascript OOM (Reached heap limit).Note that this is almost entirely my fault. My implementation of the Rational numbers is extremely inefficient. And I create new rationals for every operation I complete.

15:56 - …

I have sat here poking at my code for 10 minutes and I don’t think I can do anything about having the world’s worst replacement for floating point numbers. I’m very close to a proof of concept, the smart contract loads the data, trains the model then runs out of memory during the prediction step.

16:09 - A terrible mistake

I’ve been calculating the covariance wrong. Just entirely wrong. I tried to make an optimisation but it was just not correct. Essentially I calculated the following

\[ \sum_ {i = 1}^ n \frac{(x_i - y_i)^2}{n} \]

I actually want the following

\[ \sum_ {i=1}^n \frac{(x - \bar{x}_i)(y-\bar{y}_i)}{n} \]

16:19 - Success!

I gave Buzzword the data ((X = [1, 2], Y = [2, 4])), I then asked it to train the linear regression model. I gave it the value 1 and asked it to predict a result and it returned 2. Nice!

I gave it 2 and asked it to predict a result and it returned 4. Cool!

I gave it 3 and asked it to predict a result and it returned 6. Awesome!

I checked the internals and it has learned the function \(Y = 2X\) or more precisely \(Y = \frac{0}{262144} + \frac{32768}{16384}X\) which computes the same function.

Here’s my code so far.

pragma solidity ^0.8.7;

contract Buzzword {

bytes1 constant ZERO = '0';

bytes1 constant NINE = '9';

int[] xs;

int[] ys;

int[] work;

int result;

Rational linearRegressionBias;

Rational linearRegressionWeight;

struct Rational {

int p;

int q;

}

function rationalEquals(Rational memory left, Rational memory right) private returns (bool) {

return left.p * right.q == left.q * right.p;

}

function rationalAdd(Rational memory left, Rational memory right) private returns (Rational memory) {

return Rational(left.p * right.q + right.p * left.q, left.q * right.q);

}

function rationalNegate(Rational memory rational) private returns (Rational memory) {

return Rational(-rational.p, rational.q);

}

function rationalSubtract(Rational memory left, Rational memory right) private returns (Rational memory) {

return rationalAdd(left, rationalNegate(right));

}

function rationalMultiply(Rational memory left, Rational memory right) private returns (Rational memory) {

return Rational(left.p * right.p, left.q * right.q);

}

function rationalSquare(Rational memory rational) private returns (Rational memory) {

return rationalMultiply(rational, rational);

}

function rationalInvert(Rational memory rational) private returns (Rational memory) {

return Rational(rational.q, rational.p);

}

function rationalDivide(Rational memory left, Rational memory right) private returns (Rational memory) {

return rationalMultiply(left, rationalInvert(right));

}

function normalise (Rational memory rational) private returns (Rational memory) {

return Rational(rational.p / rational.q, 1);

}

function integerToRational (int number) private returns (Rational memory) {

return Rational(number, 1);

}

function uintegerToRational (uint number) private returns (Rational memory) {

return integerToRational(int(number));

}

function leastSquaresLoss (int[] memory yacc, int[] memory yhat) private returns (Rational memory) {

require(ys.length == yhat.length);

Rational memory n = uintegerToRational(ys.length);

Rational memory loss = integerToRational(0);

for (uint index = 0; index < ys.length; index++) {

Rational memory actual = integerToRational(yacc[index]);

Rational memory predicted = integerToRational(yhat[index]);

Rational memory deviation = rationalSubtract(actual, predicted);

loss = rationalAdd(loss,

rationalDivide(

rationalSquare(deviation),

n

)

);

}

return loss;

}

function trainLinearRegressionModelWithOls() public {

Rational memory Y_bar = integerToRational(0);

Rational memory X_bar = integerToRational(0);

Rational memory X_squared_bar = integerToRational(0);

Rational memory B1;

Rational memory B0;

Rational memory Cov_XY = integerToRational(0);

require(xs.length == ys.length);

// Calculate B0 = X_bar

// And B1 = Cov(X, Y)/Var(X)

Rational memory n = uintegerToRational(xs.length);

for (uint index = 0; index < xs.length; index++) {

// Contributing to the mean of X

Rational memory x = integerToRational(xs[index]);

Rational memory y = integerToRational(ys[index]);

X_squared_bar = rationalAdd(

X_squared_bar,

rationalDivide(rationalSquare(x), n)

);

X_bar = rationalAdd(

X_bar,

rationalDivide(x, n)

);

Y_bar = rationalAdd(

Y_bar,

rationalDivide(y, n)

);

}

for (uint index = 0; index < xs.length; index++) {

Rational memory x = integerToRational(xs[index]);

Rational memory y = integerToRational(ys[index]);

Rational memory contribution = rationalDivide(

rationalMultiply(

rationalSubtract(x, X_bar),

rationalSubtract(y, Y_bar)

),

n

);

Cov_XY = rationalAdd(

Cov_XY,

contribution

);

}

// Calculate the variance of X

Rational memory Var_X = rationalSubtract(X_squared_bar, rationalSquare(X_bar));

B1 = rationalDivide(Cov_XY, Var_X);

B0 = rationalSubtract(

Y_bar,

rationalMultiply(B1, X_bar)

);

linearRegressionBias = B0;

linearRegressionWeight = B1;

}

function predict(int x) public payable {

result = normalise(

rationalAdd(

linearRegressionBias,

rationalMultiply(

linearRegressionWeight,

integerToRational(x)

)

)

).p;

}

function predictionResult() public view returns (int) {

return result;

}

function loadData(string memory xstring, string memory ystring) public payable {

bytes memory xbytes = bytes(xstring);

bytes memory ybytes = bytes(ystring);

// Work on the assumption that there is at least one item in the arrays

xs = readIntegerArray(xbytes);

ys = readIntegerArray(ybytes);

}

function testXsContainsData() public view returns (int) {

return xs[0];

}

function readIntegerArray(bytes memory bArray) private returns (int[] memory){

work = new int[](0);

// Work out how many numbers there are

uint numIntegers = 0;

for (uint index = 0; index < bArray.length; index++) {

if (bArray[index] == ";") {

numIntegers++;

}

}

// The beginning of the number begins at

uint bArrayIndex = 0;

for (uint index = 0; index < numIntegers; index++) {

// Read the sign

int sign;

if (bArray[bArrayIndex] == "+") {

sign = 1;

}

else if (bArray[bArrayIndex] == "-") {

sign = -1;

}

else {

require(false);

}

// Increment the string index

bArrayIndex++;

uint number;

// Read numbers until the termination character

while (bArray[bArrayIndex] != ";") {

number *= 10;

bytes1 digitByte = bArray[bArrayIndex];

require(ZERO <= digitByte && digitByte <= NINE);

uint8 digitValue = uint8(digitByte) - uint8(ZERO);

number += digitValue;

bArrayIndex++;

}

// Skip the final termination symbol

bArrayIndex++;

work.push(int(number) * sign);

}

return work;

}

}16:45 - A pretty picture

I spent 20 minutes making this graph to explain what Buzzword has done. I am so proud of my creation.

18:04 - Break over

I’ve just finished an hour break. I’m going to make a promotional webpage.

18:07 - Another project?

I found the following [1] blog post a few days ago that used the

Salesforce CodeGen model to predict the probability that a specific

token follows given the previous sequence of text. The model determined

that the probability of the return appearing in the context

that it did was low, correctly inferring that there was a bug. This gave

me the idea of building a tool like Grammarly but for your code. The

author doesn’t detail how they did it, but I can work it out.

- AI Found a Bug in My Code, Joel Einbinder

19:17 - A difficulty

My measly 24.9GB of RAM and swap is not enough to run the Salesforce Codegen model. This is a problem.

19:19 - My options

I can either use a smaller model or I can use Google Colab to run computation then export the results to an external application that I’ll create. I prefer the latter idea.

19:37 - Another idea??

I had the idea that I can simulate a Turing machine using GitHub actions with a new commit generated for every step of computation. I like this idea because it is stupid and there is also a prize for the best use of GitHub. I think I’ll enter as a contestant.

19:44 - GitHub repository created

My GitHub repository is live at https://github.com/dignissimus/github-actions-turing-machine/

19:51 - Deciding on the structure of the project

So I want to store the definition of the turing machine somewhere which should be ok. Then I also need to store the state of the turing machine. I left my Theory of Computation books at home but I should be good using Wikipedia as a reference.

19:53 - Another realisation

I have an assignment for Discrete Maths due in on Monday. I was organised and mostly completed the assignment in advance. But on the final question I used an inductive proof, I haven’t shown the base case because it required some manipulation. I will start this sometime soon.

22:00 - Slideshow Karaoke

I’ve finished watching Slideshow Karaoke, an awesome event. And I’ve done my discrete maths as well.

22:15 - Project Structure

I think I will create a file called state to store the

state of the turing machine. To keep things standard, I’ll give the

Turing machine a binary alphabet (0 and 1) and a single tape for input

and output. These choices do not matter for the computational power of

the Turing machine.

The state file will contain 3 lines.

The first line will contain a sequence of 0s 1s. Initially, the tape is filled with 0s. These can be stored implictly.

The second line will contain a number, this represents the location of the read-write head on the tape. This number takes on a value from the natural numbers ((\mathbb N = {0, 1, 2, \dots})).

The third line will again contain a number. This number will index the state that Turing machine is currently in.

There will also be a machine file. Each line in this

file will define a state for the turing machine. The first line is the

starting state.

Each state-definition will contain 2 sections. The first section defines the action of the turing machine on input 0. The second section defines the action of the turing machine on input 1.

A section defines what the machine outputs, the direction to move the read-write head and the state to transition to.

As an example I will give a definition for the 3-state busy beaver champion.

State

0

0

0Machine

1.R.1,1.L.2

1.L.0,1.R.1

1.L.1,1.L.HIn fact, at the start of computation the state file can be initialised to three zeros for any turing machine.

22:41 - GitHub actions

I don’t exactly know how GitHub actions work, but that’s ok. I will learn.

22:43 - Turing machine status

I’ve decided that I’ll create a status file, its value will be

running, halt or start. A commit

that changes the contents of the status file to start will

begin to perform the steps of computation for the Turing machine.

23:59 - Midnight Pizza

I have been rewarded for my efforts with midnight peperoni pizza. I am happy.

01:10 - It works?

I was able to start the Turing machine and it correctly performed the first step of computation, but it seems to get stuck and not do anything afterwards.

To remedy this, I decided to set up a cron schedule to start the action every 5 minutes so it can perform the next step of computation.

Will this work? I will find out in 5 minutes.

01:25 - Seemingly no progress

The cron job does not seem to be running. however, I have another idea. I can run all the commits at once inside the action instead of running each commit in seperate action runs.

01:36 - Progress

The Turing machine completed three (!) steps before

crashing. I am very happy.

The error message is not that descriptive, it reads as follows

sed: -e expression #1, char 27: Invalid content of \{\}I’m able to deduce that the line causing the error is the following

# Replace the character under the read-write head with the output

# from the state

NEW_TAPE=$(echo $TAPE | sed -e "s/\(.\)\{$((LOCATION))\}.\(.*\)/\1$OUTPUT\2/")I’ve just noticed the problem. You might be able to notice that in the above image, the Turing machine moves one to the right. So now it’s in the second cell. Now it moves one to the left, it is back in the first cell. It then moves one to the left - it falls off the grid.

01:44 - Definitions

I’ve noticed that the example for the three-state busy beaver

champion uses a two-way infinite tape. Hm. When doing Computer Science

this doesn’t really matter because they are effectively equivalent. But

I am not doing Computer Science right now, I am doing Computer Hackery

and I am using sed to simulate a Turing machine. This

matters a lot.

01:48 - A hack

I can add a hack that goes like this: If the location of the read write head becomes negative, then I can prepend a character and pretend that the read-write head is at location 0.

02:06 - It worked too well

So the hack worked - sort of. It worked and kept on going. Then it

just kept on going and going and going. The Turing machine just didn’t

stop. The repository now has 163 commits.

02:41 - Tired

Oh my god I’m so tired.

03:10 - What have I done

The machine seems to have started itself while I wasn’t paying attention. The repository is now at 954 commits oh god.

Was it that cron schedule I wrote a while back?

03:12

We’ve hit 1,000! I need to turn this off somehow.

03:31 - It works!

There was some weird and subtle bug with one of my sed rewrite rules.

I fixed the bug and the Turing machine works in its entirety! I present

to you the three-state busy beaver champion, simulated using GitHub

actions and sed.

03:59 - The end

So I have about 8 hours left for the Hackathon but I think I’ll

finish here. I’ve had so much fun at Durhack, my first ever Hackathon.

07:32 - Renewed motivation?

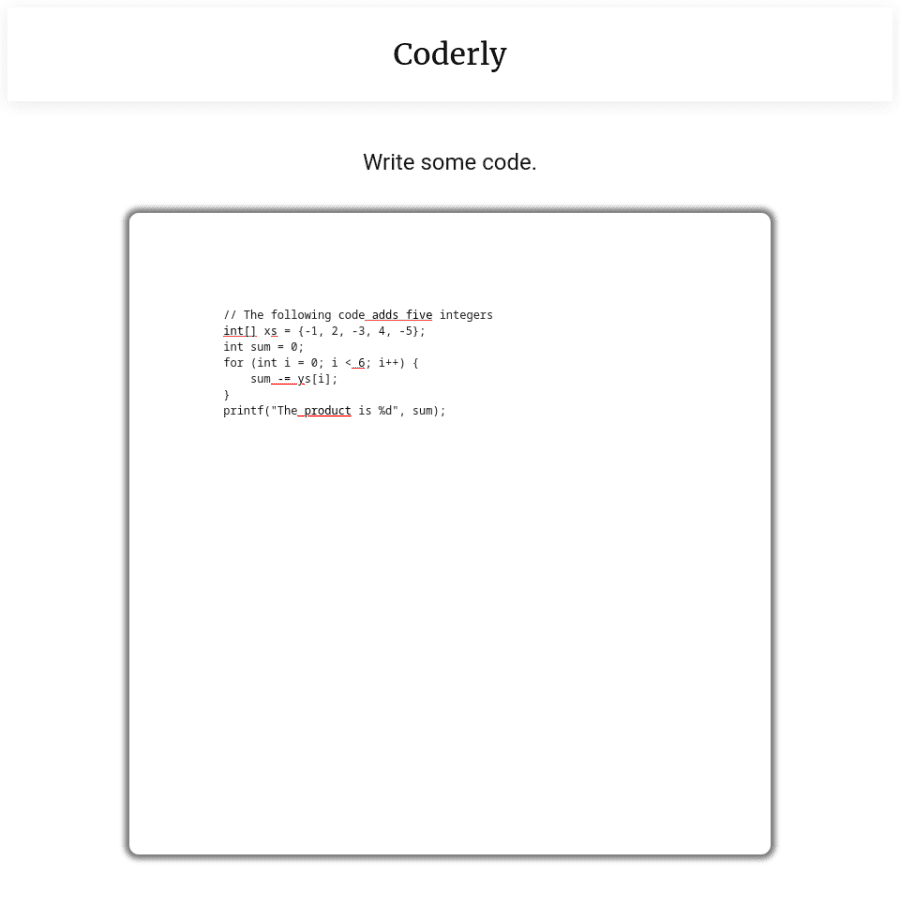

So, I stayed awake just walking around and chatting to people. I feel like working on Coderly. I have four and a half hours so I’ll see what I can do.

07:33 - Sample code

Below is the canonical source code I will be testing against. See if you can find the errors.

// Adds five integers

int[] xs = {-1, 2, -3, 4, -5};

int sum = 0;

for (int i = 0; i < 6; i++) {

sum -= ys[i];

}

printf("The product is %d", sum);07:36 - Opening up Google Colab

I’m going to load the 2B parameter model into Google Colab.

07:38 - Things are looking good

The model seems to load in Google Colab. This is god.

08:03 - Good news!

The model does what I want it to do! I’ll try scaling it to the 6BN model then export the data to process locally.

08:06 - Thank you Google

Google’s beefy servers allow me to download a 15GB model in just 4 minutes.

08:07 - Amending the sample code

I’ve noticed that the model has some implicit bias. It assumes the

language is not C and doesn’t expect to see int[]

// The following C code adds five integers

int[] xs = {-1, 2, -3, 4, -5};

int sum = 0;

for (int i = 0; i < 6; i++) {

sum -= ys[i];

}

printf("The product is %d", sum);08:11 - Model’s downloaded!

Yay!

08:11 - Colab instance crashed!

So I only have about 12GB RAM available on my Google Colab instance. The 6B model seems to be too much. Back to 2B I go!

08:16 - Data downloaded!

Hacking time!!

08:53 - The end!

Proof of concept done! Writeup finished. Here’s the final result.

17:58 - Round up

I’ve had such an amazing time. I was a finalist so I got to demonstrate my project and I ended up being a runner-up which won me a shiny new keyboard and QRT also gave me some sweet bottles for which I am very grateful for.

My GitHub actions Turing Machine also won me an award for most creative use of GitHub. This has been a superb weekend.